Megan Richardson is a Professor of Law at Melbourne Law School and a Chief Investigator in the ARC Centre of Excellence for Automated Decision-Making and Society (ADM+S). Mark Andrejevic is a Professor of Media Studies at Monash University and a Chief Investigator at the ADM+S Centre’s Monash Node. Jake Goldenfein is a law and technology scholar at Melbourne Law School and an Associate Investigator in the ARC Centre.

In January 2020, the New York Times published an exposé on Clearview AI, a facial recognition company that scrapes images from across the web to produce a searchable database of biometric templates.

A user uploads a 'probe image' of any person, and the tool retrieves additional images of that person from around the web by comparing the biometric template of the probe with the database.
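To make the "probe image" search more concrete, here is a minimal sketch of how a one-to-many biometric search can work in principle. It assumes each face has already been turned into a fixed-length list of numbers (a template) by some face-embedding model, which is not shown. The cosine-similarity measure, the 0.8 threshold, the toy three-number templates and the names `search` and `database` are all hypothetical illustrations, not a description of Clearview AI's actual system.

```python
import math

# Hypothetical sketch only: templates are lists of floats produced by some
# face-embedding model (not shown). This is NOT Clearview AI's actual method.


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two template vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(probe: list[float], database: dict[str, list[float]],
           threshold: float = 0.8) -> list[str]:
    """Compare the probe template with every stored template and return the
    source URLs of sufficiently similar ones, most similar first.
    The threshold is an assumed value chosen for illustration."""
    scored = [(cosine_similarity(probe, template), url)
              for url, template in database.items()]
    return [url for score, url in sorted(scored, reverse=True)
            if score >= threshold]


# Toy database mapping hypothetical image URLs to 3-number templates
# (real templates typically have hundreds of dimensions).
database = {
    "https://example.com/a.jpg": [0.9, 0.1, 0.0],
    "https://example.com/b.jpg": [0.0, 1.0, 0.0],
    "https://example.com/c.jpg": [0.8, 0.2, 0.1],
}
print(search([1.0, 0.0, 0.0], database))
# prints ['https://example.com/a.jpg', 'https://example.com/c.jpg']
```

In this sketch the database keeps a link from each template back to the web page where the image was found; it is that link, rather than the face match alone, that lets a result point towards who someone is.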

It soon emerged that law enforcement agencies around the world, including Australian law enforcement, were using Clearview AI, often without oversight or accountability. A global slew of litigation has followed, challenging the company's aggregation of images, creation of biometric templates and biometric identification services.

Findings against the company mean that Clearview AI no longer offers its services in certain jurisdictions, including the UK, Australia and some US states.

But this has hardly stopped the company. Clearview is expanding beyond law enforcement into new markets with products such as Clearview Consent, which offers facial recognition to commercial entities that require user verification (in sectors such as finance, banking, airlines and other digital services).

Other companies are selling similar facial recognition products to the general public. It's clear the private sector has an appetite for these technical capacities: CHOICE has exposed how numerous Australian retailers build and deploy facial recognition for various purposes, including security and loss prevention.

Facial recognition and data privacy laws

In Australia, the deployment of facial recognition is primarily governed by data privacy (or data protection) legislation that, while designed for different technologies, has been somewhat successful in constraining the use of facial recognition tools by private companies and Australian law enforcement.

The Office of the Australian Information Commissioner (OAIC) investigated Clearview AI in partnership with the UK Information Commissioner's Office, and determined in November last year that the company had breached several of the Australian Privacy Principles (APPs) in the Privacy Act 1988. The Clearview AI determination is the OAIC's most extensive consideration of facial recognition to date.


But a closer look shows an awkward relationship between facial recognition and existing privacy law.

While the Australian Information Commissioner should be applauded for interpreting Australian law in ways that framed the use of facial recognition by companies operating in Australia as privacy violations, it is unclear whether the same reasoning would apply to other facial recognition applications, or would survive scrutiny by the courts on appeal.

In fact, the specifics of how facial recognition works, and is being used, challenge some of the basic ideas and functions of data privacy law.

Commissioner's finding reveals complex issues

A few aspects of the determination highlight the complexities in the relationship between data privacy law and facial recognition.

For Clearview AI to be subject to the APPs, it has to process "personal information". This means information about an identified or reasonably identifiable individual.

Clearview argued the images it collects by scraping the web are not identified, and that the biometric information it created from those images was not for the sake of identification, but rather to distinguish the people in photos from each other. It acknowledged that providing URLs alongside images may help with identification, but not always.

The Commissioner, however, found that because those images contained faces they were 'about' an individual. They were also reasonably identifiable because biometric identification is the fundamental service that Clearview provides. The biometric information (the templates) that Clearview extracted from those images was deemed personal information for similar reasons.


After finding that Clearview was processing personal information, the Commissioner evaluated whether Clearview was also processing "sensitive information", which is subject to stricter collection and processing requirements. That category includes "biometric information that is to be used for the purpose of automated biometric verification or biometric identification; or biometric templates".

The latter is generally understood to be the data collected for the sake of enrolment in a biometric identity system. Collection and processing of sensitive information is typically prohibited without consent under APP 3.3, subject to very narrow exceptions in APP 3.4.

While the Commissioner acknowledged that collecting sensitive information without consent is permissible in cases of threat to life or public safety, those APP 3.4 exceptions did not apply in this case.

Covert image collection and the risk of harm

The Commissioner was particularly concerned about this kind of "covert collection" of images because it carried significant risks of harm. For instance, it created risks of misidentification by law enforcement and of people being identified for purposes other than law enforcement, and it could leave individuals feeling that they were under constant surveillance.

Such collection was not by lawful and "fair" means (as required by APP 3.5) because:

- the individuals whose personal and sensitive information was being collected by Clearview would not have been aware or had any reasonable expectation that their images would be scraped and held in that database

- the information was sensitive

- only a very small fraction of individuals included in the database would ever have any interaction with law enforcement

- although it did have some character of public purpose – being a service used by law enforcement agencies – ultimately the collection was for commercial purposes.

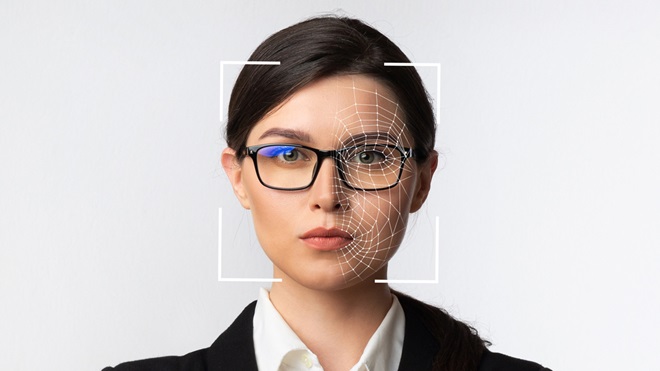

OPINION: "The specifics of how facial recognition works, and is being used, challenge some of the basic ideas and functions of data privacy law." [IMAGE: Clearview AI]

Is a facial image 'personal information'?

The Clearview AI case is a fairly egregious example of data processing in breach of the law. But the legal status of other facial recognition tools and applications is less clear.

For instance, it is not always clear whether collected facial images constitute personal information. Debate continues in other jurisdictions about the status of facial images and the scope of data protection law. The European Data Protection Board was silent on this point in its 2019 guidelines on processing personal data through video devices, and there have been conflicting interpretations in the literature.


Although there does appear to be some consensus that images from photography or video that are recorded and retained in material form should be considered personal information, this still requires that the person in an image be reasonably identifiable.

That was not controversial in the Clearview case because images were collected for the sake of building a biometric identification system, and its database of images and templates was retained indefinitely.

But some facial recognition technologies will anonymise or even delete images once biometric vectors are extracted, meaning there may no longer be a link between the biometric information and an image, making future identification more difficult.

How long material is retained also seems critical to any capacity for future identification.

The 'landmark' loophole

Further, not all biometric systems perform 'identification'. Some perform other tasks such as demographic profiling, emotion detection, or categorisation.

Biometric information extracted from images may be insufficient for future identification because instead of creating a unique face template for biometric enrolment, the process may simply extract 'landmarks' for the sake of assessing some particular characteristic of the person in the image.

If biometric vectors are just landmarks for profiling, and no image is retained, identification may be impossible. The European Data Protection Board has suggested such information, i.e. 'landmarks', would not be subject to the more stringent protections in the European General Data Protection Regulation for sensitive information.

This raises the question of whether images collected for a system that does not lend itself to identification would be personal information at all (let alone sensitive information) under the Privacy Act.

Nevertheless, these technologies are often used for different types of 'facial analysis' and profiling, for instance, inferring a subject's age, gender, race, emotion, sexuality or anything else.

Singled out at 7-Eleven

Before the Clearview AI determination, the OAIC considered 7-Eleven's use of facial recognition, a case that touched on these questions more closely.

7-Eleven was collecting facial images and biometric information as part of a customer survey system. The biometric system's primary purpose was to infer the age and gender of survey participants. However, the system was also capable of recognising whether the same person had made two survey entries within a 20-hour period, for the sake of survey quality control.

Here the Commissioner deemed the collection a breach of the APPs because there was not sufficient notice (APP 5), and collection was not reasonably necessary for the purpose of flagging potentially false survey results (APP 3.3).

7-Eleven argued that the images collected were not personal information because they could not be linked back to a particular individual, and therefore were not reasonably identifiable.

The Commissioner found that the images were personal information, however, because the biometric vectors still enabled matching of survey participants (for the sake of identifying multiple survey entries), and therefore that the collection was for the purpose of biometric identification.

This reasoning drew on the idea of 'singling out': the ability to distinguish one individual from all other members of a group.
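A minimal sketch can show why 'singling out' is not the same as identification. It assumes, hypothetically, that each survey entry stores only a face vector and a timestamp, and that an entry is flagged when it closely matches an earlier entry made within 20 hours (the window described in the 7-Eleven case). The distance threshold, the `SurveyEntry` type and the `flag_repeat_entries` function are illustrative assumptions, not 7-Eleven's actual implementation.

```python
import math
from dataclasses import dataclass
from datetime import datetime, timedelta

WINDOW = timedelta(hours=20)  # window described in the 7-Eleven case
MAX_DISTANCE = 0.3            # hypothetical threshold for "same face"


@dataclass
class SurveyEntry:
    entry_id: int
    template: tuple[float, ...]  # face vector from some model (not shown)
    submitted: datetime


def flag_repeat_entries(entries: list[SurveyEntry]) -> list[int]:
    """Return IDs of entries whose face vector closely matches an earlier
    entry submitted within WINDOW. No name or civil identity is stored:
    the system tells entries apart without knowing who anyone is."""
    flagged = []
    ordered = sorted(entries, key=lambda e: e.submitted)
    for i, current in enumerate(ordered):
        for earlier in ordered[:i]:
            within_window = current.submitted - earlier.submitted <= WINDOW
            close_match = math.dist(current.template, earlier.template) <= MAX_DISTANCE
            if within_window and close_match:
                flagged.append(current.entry_id)
                break
    return flagged


t0 = datetime(2020, 1, 1, 9, 0)
entries = [
    SurveyEntry(1, (0.10, 0.90), t0),
    SurveyEntry(2, (0.80, 0.20), t0 + timedelta(hours=1)),
    SurveyEntry(3, (0.12, 0.88), t0 + timedelta(hours=5)),   # near-duplicate of entry 1
    SurveyEntry(4, (0.11, 0.91), t0 + timedelta(hours=30)),  # near-duplicate, but outside window
]
print(flag_repeat_entries(entries))  # prints [3]
```

Entry 3 is singled out as a likely repeat, yet nothing in the data links it to a named person; doing that would require some further source connecting the vector to a civil identity.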


In this case, the Commissioner also held that the facial images were themselves biometric information, in that they were used in a biometric identification system.

Compared to the Clearview example, the finding here that images and templates were both personal information and sensitive information is somewhat less robust.

There has been substantial critique of approaches to identifiability premised on 'singling out' because the capacity to single out says nothing really about the identifiability of the individual. Even the assignment of a unique identifier within the system is not enough to 'identify' a person – there would still need to be a way to connect that identifier with an actual person or civil identity.

Finding that information is personal because of its capacity to single out, but without necessarily leading to identification, may be a desirable interpretation of the scope of data protection, but it is not settled as a matter of law.

Singling out remains important because it still enables decisions that affect the opportunities or life chances of people – even without knowing who they are. But whether singling out, alone, will bring data processing within the scope of data protection law is yet to be unequivocally endorsed by the courts.

Considering the Australian Federal Court's somewhat parsimonious approach to the definition of personal information in the 2017 Telstra case, this finding might not withstand judicial scrutiny.

The TikTok settlement

The 2021 TikTok settlement under the Illinois Biometric Information Privacy Act (BIPA) looked at similar issues. There, TikTok claimed that the facial landmark data it collected and the demographic data it generated, used both for facial filters and stickers and for targeted advertising, were anonymous and incapable of identifying individuals. But the matter was settled, so the significance of anonymity was not further clarified.

Identifying and non-identifying biometric processes

BIPA has no threshold requirement for personal information, and is explicitly uninterested in governing the images from which biometric information is drawn.

As a result, BIPA may take a more catch-all approach to biometric information irrespective of application. This differs from the Australian and European approaches under general data protection law, which clearly distinguish identifying and non-identifying biometric processes.

Lack of clarity on facial recognition law

As facial recognition applications further proliferate in Australia, we need more clarity on what applications are considered breaches of the law, and in what circumstances.

In the retail context, facial recognition used for security purposes is likely to be considered biometric identification, because the purpose is to match an actual person in a store against database images of known security threats.

But systems used exclusively for customer profiling may not satisfy those thresholds, while still enabling differentiated and potentially discriminatory treatment.


Where identification is clearly happening, which forms of notice and consent will satisfy the APPs also requires further clarification. But ultimately, any such clarification should follow a debate about whether these tools, be they for profiling or identification, by the private sector or government, are desirable or permissible at all.

These OAIC determinations expose a tension between using existing legal powers to regulate high-risk technologies and advocating for better-suited law reform, as is happening in the (currently ongoing) Privacy Act Review.

One reform that might be considered is a more specific approach to biometric information.

From our perspective, a regime focused on facial recognition or related biometrics may be more restrictive, and address some of the technology's specificities without getting caught up in whether images or templates are personal information.

And more precise rules for facial recognition could be part of a shift towards more appropriate regulation of the broader data economy.


Stock images: Getty, unless otherwise stated.